Pain / Gain

- Problem: HPC places utmost importance on application performance, and optimisation generally involves manual profiling and tuning of applications to suit the target hardware. This optimisation process is not portable and has to be repeated when moving to another HPC system. Cloud targets, with hundreds of different server configurations, provide flexibility but lack the control and performance of HPC systems. In a software-defined infrastructure, automating the optimisation of application deployments for heterogeneous targets remains an unsolved problem. Container virtualisation has accelerated the convergence of HPC and cloud thanks to its ease of use, portability, scalability, and the arrival of user-friendly runtimes. MODAK addresses the problem of enabling application experts with limited hardware or optimisation knowledge to use diverse targets in an optimal way. When the individual components of an application have many deployment options, it is also unclear how to select the best, or at least an appropriate, set of options for a given context (workload range). Finally, at the node level, it is hard to manage heterogeneous resources so that QoS requirements are fulfilled at runtime.

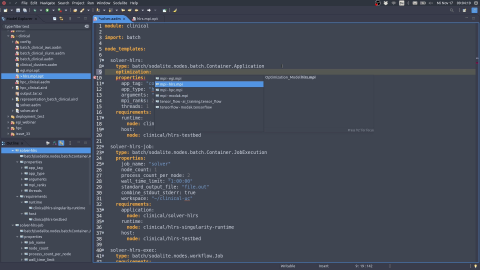

- Solution: Application performance optimisation is highly dependent on the application, its configuration, and the infrastructure. The data scientist, or AoE, selects application optimisations using the SODALITE IDE. Optimisations include changes to the application configuration, the environment, or the runtime. Application runtime parameters can be further autotuned for improved performance. To apply the optimisations, MODAK requires the application code to be written against a standard high-level API and to be supplied together with the application inputs and configuration. This enables the Optimiser to make performance decisions for the available target based on performance modelling. The Optimiser takes pre-built, optimised containers from the Image Registry and modifies them to build an optimised container for the application deployment. It also makes changes to the runtime, deployment, and job scripts submitted to HPC schedulers. Additionally, the node manager manages node resources, including overall node capacity and throughput, while maintaining the node goals.

- Value: There is a wealth of experience in the manual optimisation of applications in the HPC domain. Automating this experience for an IaC environment is the main feature of MODAK. Some optimisations that involve code modifications cannot be automated and are out of scope. Porting application optimisation to cloud and edge deployments based on HPC optimisation is of great value. Cloud and Edge have different usage patterns and requirements, and the optimisation process should be adjusted to cater for the wide diversity and the strengths of such targets. Refactoring applications for performance is common in Cloud, and MODAK aims to transfer this learning to the HPC environment.

Product

- Functionality: This layer provides tools to optimise an application deployment at design time (static) and at runtime (dynamic). Static optimisation is supported by MODAK. Based on the performance model and the optimisations selected by the AoE, MODAK maps the optimal application parameters to the infrastructure target and builds an optimised container. Both the static and the dynamic application optimisers are well documented, and their recorded ISC-HPC 2020 presentations can serve as demonstrators. The node manager manages node resources, including overall node capacity and throughput, while maintaining the node goals assigned by the Deployment Refactorer.

- Technology: MODAK builds a performance model by running standard benchmarks on infrastructure targets and fitting a linear statistical model to the results. These models help MODAK predict application performance. MODAK focuses on three application types: AI Training & Inference, Data Analytics, and Traditional HPC applications. Alongside its support for different architectures and diverse targets, MODAK also supports autotuning of application parameters. The node manager, on the other hand, provides a centralised smart load-balancing algorithm that schedules application requests on GPUs or CPUs according to application needs. For each container, it also deploys a lightweight control-theoretic planner that continuously refines the container's CPU allocation using vertical scalability. Finally, for each machine, it deploys a so-called supervisor that handles resource contention between concurrently executing containers.

- Status: The MODAK package, a software-defined optimisation framework for containerised HPC and AI applications, is the SODALITE component responsible for the static optimisation of applications before deployment. After an initial preparation phase during the first year of the project (see [D3.3]), in the second year we extended MODAK to support HPC systems. In particular, we prototyped AI training and inference as well as traditional HPC applications (see [D6.3]). MODAK has since been extended to cover the three use cases and integrated into the SODALITE framework.
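
The benchmark-driven linear performance model described under Technology can be pictured with a minimal sketch. It is not MODAK's implementation: the target names, the benchmark numbers, and the helper functions `fit_linear`, `predict`, and `best_target` are all hypothetical, and a single input feature (problem size) is assumed for simplicity.

```python
from statistics import mean


def fit_linear(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct x values")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def predict(model, x):
    """Predicted runtime for input size x under a fitted (intercept, slope)."""
    intercept, slope = model
    return intercept + slope * x


# Hypothetical benchmark results per target: (problem sizes, runtimes in seconds).
benchmarks = {
    "hpc_cpu_node": ([1, 2, 4, 8], [2.1, 4.0, 8.1, 15.9]),
    "cloud_gpu_vm": ([1, 2, 4, 8], [3.0, 3.6, 4.9, 7.4]),
}

# One linear model per infrastructure target.
models = {target: fit_linear(xs, ys) for target, (xs, ys) in benchmarks.items()}


def best_target(size):
    """Pick the target with the lowest predicted runtime for this size."""
    return min(models, key=lambda target: predict(models[target], size))


if __name__ == "__main__":
    for size in (1, 8):
        print(size, best_target(size))
```

With these made-up numbers the CPU node is predicted to be faster for small inputs and the GPU VM for large ones, which is the kind of target-dependent decision a performance model is meant to support.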

What's Unique

- Differentiator: No solution on the market appropriately addresses the static optimisation of application deployments. In addition, the node manager is the first approach that allows coordinated management of CPU and GPU resources for multiple concurrent, containerised applications by combining smart scheduling of requests with fine-grained vertical scalability of resources.

- Innovation: For commercial applications in fields such as climate modelling and materials science, there is a wealth of expertise on how the applications perform, how they can be optimised by adding resources, and how they scale across infrastructures. For HPC applications in general, this can be very difficult to determine without deep application knowledge, and profiling expertise is often required. We are attempting a minimal and novel approach that an expert can use without having to undertake an exhaustive study of the applications. It can be expanded further to take in additional knowledge, profiling data, or autotuning. The same techniques are also being applied to AI and Big Data deployments.

- Partnerships: This layer is led by a strong industrial partner, HPE, in collaboration with a strong research partner, POLIMI, with the support of other members of the consortium.
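
The fine-grained vertical scalability mentioned under Differentiator can be illustrated with a simple feedback rule. The sketch below is an assumption-laden toy, not the node manager's actual planner: the class `CpuPlanner`, its gain, its core limits, and the use of response time as the controlled metric are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class CpuPlanner:
    """Integral-style feedback rule for one container's CPU allocation."""

    target_rt: float        # response-time goal in seconds (assumed metric)
    gain: float = 2.0       # cores added per second of error (assumed value)
    min_cores: float = 0.1  # lower bound on the allocation (assumed value)
    max_cores: float = 8.0  # upper bound on the allocation (assumed value)

    def step(self, measured_rt: float, current_cores: float) -> float:
        """Return the next CPU allocation given the latest measurement."""
        # Positive error means the container is slower than its goal.
        error = measured_rt - self.target_rt
        proposed = current_cores + self.gain * error
        # Clamp to the range the machine can actually grant.
        return max(self.min_cores, min(self.max_cores, proposed))


if __name__ == "__main__":
    planner = CpuPlanner(target_rt=0.5)
    cores = 1.0
    # Made-up response-time measurements for successive control periods.
    for measured in (1.0, 0.7, 0.5, 0.3):
        cores = planner.step(measured, cores)
        print(f"measured={measured:.1f}s -> allocate {cores:.2f} cores")
```

The allocation grows while the container misses its goal, holds steady when the goal is met, and shrinks when there is slack, so that freed cores can serve other containers on the same node.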